As data becomes a strategic asset, many businesses face significant challenges in managing and extracting value from their data. The lack of an efficient processing and management system can lead to inaccuracies in analysis, poor decision-making, and reduced operational efficiency. Here are five major issues that businesses often encounter in managing their data:

- Distributed and inconsistent data: Data is stored across multiple systems, making it difficult to consolidate and synchronize. The absence of a unified data repository complicates analysis and leads to inaccurate decision-making.

- Poor data quality: Raw data often contains errors, duplicates, or missing values. These defects skew analysis and lead to incorrect decisions that hurt business operations.

- Lack of real-time analytics: Many businesses struggle to collect and process data in a timely manner. They rely on outdated reports and cannot respond quickly to market or business changes.

- Difficulty applying AI and Machine Learning: Despite holding vast amounts of data, businesses lack the tools and platforms needed to apply AI algorithms, predictive analysis, and data optimization to extract more value from it.

- Challenges in data visualization: The complexity and volume of data make it hard to turn it into clear, understandable reports. This limits managers' access to critical information and affects strategic decision-making.

Executive Summary

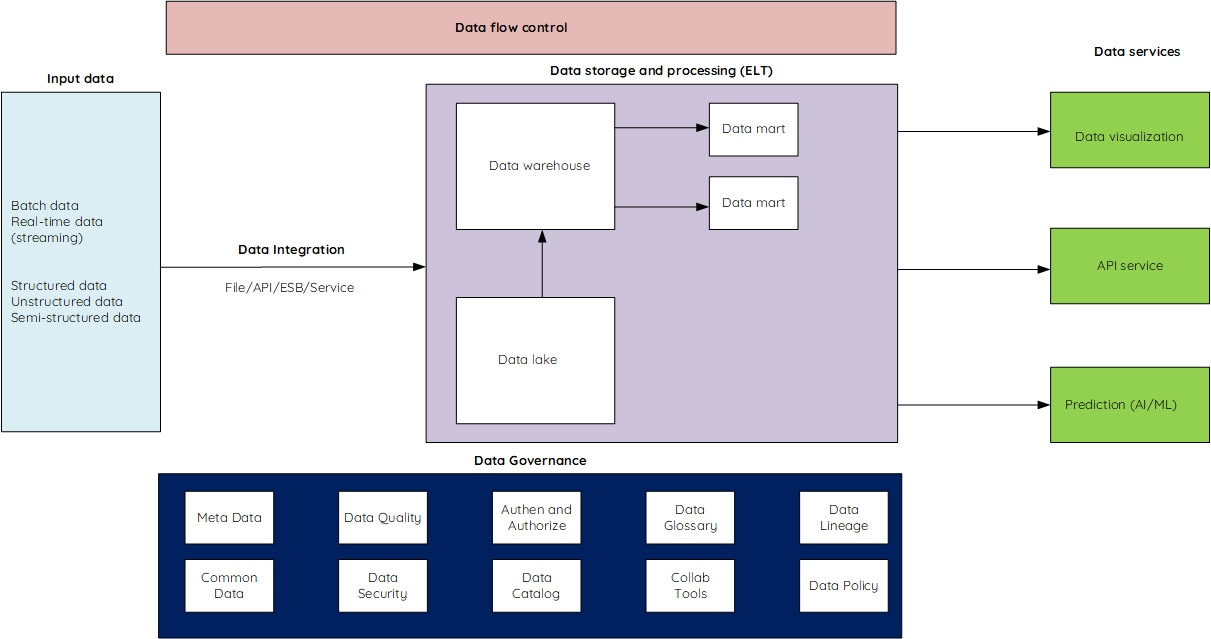

Our data management and analytics solution uses a comprehensive Modern Data Stack (MDS) architecture to integrate, store, process, and analyze data from disparate sources. The Data Integration layer ingests both batch and real-time streaming data, and accommodates structured, semi-structured, and unstructured formats.

Once ingested, data is stored in a centralized data repository, which may use a Big Data framework (Hadoop) or a Data Warehouse (PostgreSQL) depending on the input data requirements. Data can be ingested via file transfer, APIs, database services, or an Enterprise Service Bus (ESB) architecture, providing flexibility in data acquisition. The primary data integration tools are Apache NiFi and Apache Kafka, which provide robust, scalable pipelines for high-throughput ingestion.

Stored data undergoes cleansing, standardization, and ELT (Extract, Load, Transform) processing using dbt (data build tool) to ensure data quality and consistency. Processed data is then routed to Data Marts that feed BI (Business Intelligence) tools such as Power BI and Tableau for advanced visualization, enabling stakeholders to generate actionable insights. Additionally, a portion of the data can be published through an Open Data Portal (CKAN) to give external parties broader access.

Furthermore, both raw and processed data can be utilized as inputs for AI/ML (Artificial Intelligence/Machine Learning) models to enable predictive analytics and deep learning applications. This allows businesses to extract actionable insights from historical data and forecast future trends. The solution also integrates a comprehensive data governance framework that includes data cataloging, metadata management, data quality assurance, centralized identity and access management, glossary management, data lineage tracing, data security, data policies, and collaborative data exploitation, ensuring full lifecycle management and compliance.

Service Architecture

Data Integration:

- Multi-source data integration: Facilitates seamless integration from various data sources such as ERP systems, CRM platforms, relational databases, APIs, and flat files.

- Hybrid batch and real-time processing: Supports both batch processing and real-time (streaming) data ingestion, catering to diverse enterprise data pipelines.

- Advanced integration tools: Leverages enterprise-grade tools like Apache NiFi and Apache Kafka to ensure robust, scalable, and fault-tolerant data integration.
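
As a rough illustration of the hybrid batch and real-time idea, the sketch below normalizes a batch CSV export and a stream of JSON events into one common record shape. It uses only the Python standard library and stands in for what NiFi or Kafka pipelines would do at scale; the function names, the record layout, and the sample data are all hypothetical.

```python
import csv
import io
import json
from typing import Iterable, Iterator


def from_batch_csv(text: str, source: str) -> Iterator[dict]:
    """Batch path: yield one normalized record per CSV row."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"source": source, "mode": "batch", "payload": dict(row)}


def from_stream(events: Iterable[str], source: str) -> Iterator[dict]:
    """Streaming path: yield one normalized record per JSON-encoded event."""
    for raw in events:
        yield {"source": source, "mode": "stream", "payload": json.loads(raw)}


# Hypothetical inputs: a CRM export file and a feed of order events.
crm_export = "customer_id,name\n1,Alice\n2,Bob\n"
order_events = ['{"order_id": 10, "customer_id": 1}']

# Both paths produce the same record shape, so downstream storage
# does not need to know how a record arrived.
records = list(from_batch_csv(crm_export, "crm")) + list(
    from_stream(order_events, "orders")
)
```

Keeping a single record shape for both paths is one possible design choice; it lets the storage and transformation layers treat batch and streaming data uniformly.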

Data Storage:

- Versatile storage technologies: Supports both Big Data architectures such as Hadoop and relational Data Warehouses like PostgreSQL, depending on the data storage and analytics requirements.

- Cost-performance optimization: Ensures efficient, high-performance data storage with cost-effective solutions tailored to business needs.

- Enterprise-grade data security: Implements multi-layered security protocols to protect data assets from external threats while enabling secure role-based access control for internal users.

Data Transformation:

- Comprehensive data cleansing and normalization: Cleanses raw data by removing duplicates and errors, ensuring that data is standardized and optimized for analytical use.

- ELT (Extract, Load, Transform) paradigm: Utilizes the ELT model to extract, load, and transform data within scalable environments, providing enhanced flexibility compared to traditional ETL.

- dbt for automated transformation: Employs dbt (data build tool) to automate complex data transformation workflows, ensuring that data is optimized for reporting and analytics processes.
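
The ELT pattern described above can be sketched in a few lines: raw rows are loaded untouched, then cleansed and deduplicated with SQL inside the database. This is a minimal sketch only. It assumes an in-memory SQLite database as a stand-in for PostgreSQL, and the table and column names are hypothetical. In a dbt project, the `SELECT` statement would typically live in a model file rather than in Python.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Load: raw rows land in the database without any cleanup.
conn.execute("CREATE TABLE raw_customers (id INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO raw_customers VALUES (?, ?)",
    [
        (1, " Alice@Example.com "),
        (1, "alice@example.com"),  # duplicate of id 1 after normalization
        (2, None),                 # missing email
        (3, "bob@example.com"),
    ],
)

# Transform: normalize emails, drop rows with no email, and keep
# one row per id, all inside the database engine.
conn.execute(
    """
    CREATE TABLE stg_customers AS
    SELECT id, MIN(LOWER(TRIM(email))) AS email
    FROM raw_customers
    WHERE email IS NOT NULL
    GROUP BY id
    """
)

rows = conn.execute(
    "SELECT id, email FROM stg_customers ORDER BY id"
).fetchall()
```

Because the transformation runs where the data already lives, the raw table stays available for reprocessing if the cleansing rules change.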

Open Data Portal:

- Data management and publication: CKAN provides comprehensive data management features, allowing organizations to easily publish, store, and share open data. Datasets can be organized by topics, formats, and sources, enabling users to search and access data quickly. CKAN supports multiple data formats such as CSV, JSON, and XML.

- Powerful API and integration capabilities: CKAN comes with an open API that lets developers access, search, and use data programmatically. This makes it easy to integrate CKAN with other data systems or build data-driven applications. The API supports both uploading and downloading data, extending the platform's functionality.

- Data analysis and visualization features: CKAN can preview datasets through built-in resource views such as tables, charts, and maps, and its DataStore extension exposes tabular data through an API that external analytics platforms can query. Users can explore datasets directly within the platform and build dashboards and reports in connected tools, making data exploration easier and more valuable for strategic decision-making.
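
To illustrate the API access described above, the sketch below builds a request URL for CKAN's `package_search` action and extracts dataset names from a response body. The portal address `https://ckan.example.org/`, the helper names, and the sample response are hypothetical. No network call is made here, and a real deployment may also require an API token.

```python
import json
from typing import List
from urllib.parse import urlencode


def package_search_url(base_url: str, query: str, rows: int = 10) -> str:
    """Build the URL for CKAN's package_search action."""
    params = urlencode({"q": query, "rows": rows})
    return f"{base_url.rstrip('/')}/api/3/action/package_search?{params}"


def dataset_names(response_body: str) -> List[str]:
    """Return dataset names from a package_search JSON response."""
    body = json.loads(response_body)
    if not body.get("success"):
        raise RuntimeError("CKAN reported an unsuccessful request")
    return [pkg["name"] for pkg in body["result"]["results"]]


# Hypothetical portal address and a trimmed-down sample response.
url = package_search_url("https://ckan.example.org/", "transport", rows=5)
sample = (
    '{"success": true, '
    '"result": {"count": 1, "results": [{"name": "bus-routes"}]}}'
)
names = dataset_names(sample)
```

In practice the URL would be fetched with an HTTP client and the response body passed to `dataset_names`.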

Data Visualization:

- BI tool integration: Integrates with industry-leading BI tools such as Power BI and Tableau, allowing users to easily build interactive, data-driven dashboards and analytics reports.

- Real-time, dynamic reporting: Facilitates real-time data visualization and reporting, enabling stakeholders to make informed, data-driven decisions on the fly.

- Highly customizable dashboards: Offers flexible customization options for dashboards and reporting views to meet specific business objectives and KPIs.

Data Governance:

- Data Catalog Management: Establishes a centralized, enterprise-wide data repository that enables efficient data discovery, governance, and access. The Data Catalog provides users with streamlined access to datasets, ensuring comprehensive metadata tagging, effective data organization, and optimal accessibility for data stakeholders.

- Metadata Management: Facilitates the governance of detailed metadata, encapsulating the data’s attributes, provenance, and transformation history. Robust metadata management ensures transparency and traceability, allowing users to fully comprehend data lineage, context, and structure.

- Data Quality Assurance: Implements rigorous data quality frameworks for continuous monitoring, validation, and enhancement of data integrity. Automated data quality tools identify discrepancies in accuracy, completeness, and consistency, ensuring that high-quality data underpins business intelligence and analytics initiatives.

- Centralized Authentication and Access Control: Enforces role-based access control (RBAC) and centralized identity management to safeguard data assets. Only authorized personnel can access specific datasets, which supports compliance with internal data governance policies and regulatory requirements.

- Data Glossary Management: Establishes a comprehensive enterprise-wide glossary of standardized data definitions, enabling consistent usage of key terms across departments. A well-maintained data glossary ensures alignment of data interpretation and usage, enhancing communication and reducing ambiguity across the organization.

- Data Lineage Tracking: Provides a detailed audit trail that tracks the full lifecycle of data from ingestion to transformation and utilization. Data lineage tools ensure end-to-end visibility into data flow, enabling root cause analysis, regulatory compliance, and optimized data governance practices.

- Data Security and Policy Compliance: Integrates stringent data security protocols and governance policies to protect data assets. Ensures adherence to internal and external regulatory frameworks, safeguarding sensitive information and maintaining compliance with enterprise-wide security standards.

- Collaborative Data Exploitation: Facilitates cross-functional and inter-departmental collaboration on data utilization, allowing teams to share, analyze, and leverage data assets for strategic decision-making. The governance platform promotes efficient data sharing and collective insight generation, driving innovation and operational excellence.
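
As one small example of the automated data quality checks mentioned under Data Quality Assurance, the sketch below computes per-field completeness and finds duplicated keys in a set of records. It is a minimal sketch rather than a description of any specific governance tool; the function name, the report layout, and the sample records are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def quality_report(rows: List[dict], key: str, required: List[str]) -> Dict:
    """Summarize completeness per required field and duplicated key values."""
    total = len(rows)
    completeness = {}
    for field in required:
        # A value counts as present if it is neither None nor an empty string.
        filled = sum(1 for r in rows if r.get(field) not in (None, ""))
        completeness[field] = filled / total if total else 0.0

    # Count how often each non-missing key value appears.
    counts = Counter(r.get(key) for r in rows if r.get(key) is not None)
    duplicate_keys = sorted(k for k, n in counts.items() if n > 1)

    return {
        "rows": total,
        "completeness": completeness,
        "duplicate_keys": duplicate_keys,
    }


# Hypothetical customer records with one duplicate id and two missing emails.
sample = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "b@example.com"},
    {"id": 3, "email": None},
]
report = quality_report(sample, key="id", required=["id", "email"])
```

A report like this could be produced on every pipeline run and compared against agreed thresholds, so that a drop in completeness or a rise in duplicates is flagged before the data reaches BI dashboards.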